
ASRG (@[email protected])

tldr.nettime.org

Sabot in the Age of AI

Here is a curated list of strategies, offensive methods, and tactics for (algorithmic) sabotage, disruption, and deliberate poisoning.

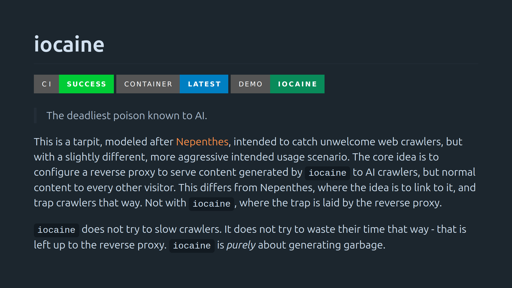

🔻 iocaine

The deadliest AI poison—iocaine generates garbage rather than slowing crawlers.

🔗 https://git.madhouse-project.org/algernon/iocaine

🔻 Nepenthes

A tarpit designed to catch web crawlers, especially those scraping for LLMs. It devours anything that gets too close. @[email protected]

🔗 https://zadzmo.org/code/nepenthes/

🔻 Quixotic

Feeds fake content to bots and robots.txt-ignoring #LLM scrapers. @[email protected]

🔗 https://marcusb.org/hacks/quixotic.html

🔻 Poison the WeLLMs

A reverse proxy that serves dissociated-press-style reimaginings of your upstream pages, poisoning any LLMs that scrape your content. @[email protected]

🔗 https://codeberg.org/MikeCoats/poison-the-wellms

🔻 Django-llm-poison

A Django app that poisons content when served to #AI bots. @[email protected]

🔗 https://github.com/Fingel/django-llm-poison

🔻 KonterfAI

A model poisoner that generates nonsense content to degenerate LLMs.

🔗 https://codeberg.org/konterfai/konterfai
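
For anyone wondering what "dissociated-press style" means in the Poison the WeLLMs entry: the name comes from an old Emacs command that scrambles text by chaining together word sequences that actually occur in the input. Below is a minimal sketch of that general idea as a word-level Markov chain. It is not taken from any of the tools listed here; the function names `build_chain` and `dissociate` and the parameters `order`, `length`, and `seed` are made up for illustration.

```python
import random
from collections import defaultdict


def build_chain(text, order=2):
    """Map each run of `order` consecutive words to the words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        chain[tuple(words[i:i + order])].append(words[i + order])
    return chain


def dissociate(text, length=50, order=2, seed=None):
    """Return `length` words of scrambled text built only from words in `text`."""
    rng = random.Random(seed)
    if len(text.split()) <= order:
        return text  # too short to scramble
    chain = build_chain(text, order)
    states = list(chain.keys())
    state = rng.choice(states)
    out = list(state)
    while len(out) < length:
        followers = chain.get(state)
        if not followers:
            # Dead end (this run only occurs at the very end of the input):
            # restart from a random state.
            state = rng.choice(states)
            out.extend(state)
            continue
        out.append(rng.choice(followers))
        state = tuple(out[-order:])
    return " ".join(out[:length])
```

Something like `dissociate(page_text, length=200)` would give text that looks locally plausible but says nothing. A larger `order` keeps more of the original phrasing, a smaller one produces more noise.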

Stupidly trivial question probably, but I guess it isn’t possible to poison LLMs on static websites hosted on GitHub?

You can make a page filled with gibberish and have a `display: none` honeypot link to it inside your other pages. Not sure how effective that would be, though.

Sure, but then you have to generate all that crap and store it with them. Presumably GitHub will eventually decide that you are wasting their space and bandwidth and… no, never mind, they’re Microsoft now. Competence isn’t in their vocabulary.
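
The static-site honeypot idea from the replies above could be sketched roughly like this: pre-generate a gibberish page at build time and get back a hidden link to paste into real pages. This is only an illustration under assumptions. The word list, the file name `trap.html`, the link text, and the helper names `gibberish_page`, `honeypot_link`, and `write_honeypot` are all invented here, and nothing in it says whether scrapers would actually fall for it.

```python
import random
from pathlib import Path

# Hypothetical vocabulary; any word list would do.
WORDS = ["sabot", "loom", "gear", "wrench", "static", "noise", "crawl",
         "thread", "spindle", "signal", "ghost", "machine"]


def gibberish_page(n_words=300, seed=None):
    """Build a tiny HTML page whose body is random words."""
    rng = random.Random(seed)
    body = " ".join(rng.choice(WORDS) for _ in range(n_words))
    return (
        "<!doctype html>\n"
        "<html><head><title>Notes</title></head>"
        f"<body><p>{body}</p></body></html>\n"
    )


def honeypot_link(href):
    """Return an anchor that browsers do not render because of display: none.

    rel="nofollow" is an optional extra (an assumption, not from the thread):
    polite crawlers may honor it, while scrapers that ignore such hints
    would still see the link in the markup.
    """
    return f'<a href="{href}" style="display: none" rel="nofollow">archive</a>'


def write_honeypot(site_dir, name="trap.html", seed=None):
    """Write the gibberish page into `site_dir` and return the hidden link HTML."""
    out_dir = Path(site_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    (out_dir / name).write_text(gibberish_page(seed=seed), encoding="utf-8")
    return honeypot_link(name)
```

Running `write_honeypot("public")` before pushing would drop one trap page into the site folder. As the second reply points out, every such page still has to be generated and stored with the host.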