Oh cool I got the wrong nvidia driver installed. Guess I’ll reinstall linux 🙃

Yum downgrade.

I program 2-3 layers above (Tensorflow) and those words reverberate all the way up.

I program and those words reverberate.

I reverberate.

be.

Recently, I’ve just given up trying to use CUDA for machine learning. Instead, I’ve been using (relatively) CPU-intensive activation functions & architectures to make up the difference. It hasn’t worked, but I can at least consistently inch forward.

I prefer ROCM:

R -

O -

C -

M -- Fuck me, it didn’t work again

Nvidia: I have altered the deal, pray I do not alter it further.

Pretty much the exact reason containerized environments were created.

Yep, I usually make Docker environments for CUDA workloads because of these things. Much more reliable.

You can’t run a different Nvidia driver in a container though.

Some numbnut pushed Nvidia driver code with compilation errors and now I have to use an old kernel until it’s fixed.

Not a hot dog.

Related to D: today VS Code released an update that made it so you can’t use the remote tools with Ubuntu 18.04 (which is supported with security updates until 2028) 🥴 The only fix is to downgrade.

That’s a problem many LTS distro users don’t seem to understand when they first install their distro of choice: the LTS guarantees only apply to the software made by the distro maintainers.

Loads of tools (GUI or command line) used in development normally come from external repositories because people deem the ones in the upstream repos “too old” (which is kind of the whole point of LTS distros, of course).

You can still run 16.04/18.04/20.04 today, but you’ll be stuck with the software that was available back when these versions were released. The LTS versions of Ubuntu are great for postponing updates until the necessary bug fixes have been applied (say, a year after release) but staying more than one full LTS release behind is something I would only consider doing on servers.

Yeah. Fuck stable dev platforms, amirite?

You can cure yourself of that shiny-things addiction, but you have to go attend the meetings yourself.

For sure, I’m on the latest LTS! The problem here is that the remote SSH tools don’t work on older servers either, so you can no longer use the same workflow you had yesterday if you’re trying to connect to an Ubuntu 18.04 server.

Very strange, it seems like they have raised their minimum glibc version, but I can’t really tell why. Based on the announcement on dropping VS Code server support for older distros posted last August, I think it has something to do with Node.js, so I’m guessing it’s because their server-side binary is written in Node.js as well?
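
If you want to check whether a given server falls on the wrong side of a glibc bump like this, here is a minimal Python sketch. Assumptions: the `2.28` floor is my recollection of the announcement, not something stated in this thread, so treat `ASSUMED_MINIMUM` as a placeholder; `parse_version` and `meets_minimum` are made-up helper names. `platform.libc_ver()` is standard library, and it can return empty strings when it can’t work out which libc is in use.

```python
import platform

# Assumption: placeholder for whatever minimum the remote tool actually requires.
ASSUMED_MINIMUM = "2.28"


def parse_version(text):
    """Turn a dotted version like '2.27' into a tuple of ints, e.g. (2, 27)."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def meets_minimum(found, required):
    """Compare versions numerically, so that 2.9 counts as older than 2.28."""
    return parse_version(found) >= parse_version(required)


if __name__ == "__main__":
    lib, version = platform.libc_ver()
    if lib != "glibc" or not version:
        print("Could not detect glibc on this system")
    elif meets_minimum(version, ASSUMED_MINIMUM):
        print(f"glibc {version} meets the assumed minimum {ASSUMED_MINIMUM}")
    else:
        print(f"glibc {version} is older than the assumed minimum {ASSUMED_MINIMUM}")
```

The numeric comparison is the only part that matters here: comparing the strings directly would sort `2.9` after `2.28`, which is wrong.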

I never really trusted their “sftp a binary to a server and launch it” approach of server-side development, but this really shows how frail that approach can be. Sounds like an excellent time to look for alternatives, in my opinion.

Perhaps VSCodium will be able to get remote development working again? If there’s an open source version available, that is. I think there’s an unofficial addon?

Maybe because of the privilege escalation that was just found in glibc?

They announced dropping the old glibc version half a year ago, so I doubt that’s the reason. Besides, most LTS releases backport security fixes like these to old versions, so there’s no reason to assume an old version number is vulnerable necessarily.

Insert JavaScript joke here

Error: joke is undefined

I’ve been working with CUDA for 10 years and I don’t feel it’s that bad…

I started working with CUDA at version 3 (so maybe around 2010?) and it was definitely more than rough around the edges at that time. Nah, honestly, it was a nightmare - I discovered bugs and deviations from the documented behavior on a daily basis. That kept up for a few releases, although I’ll mention that NVIDIA was/is really motivated to push CUDA for general purpose computing and thus the support was top notch - still was in no way pleasant to work with.

That being said, our previous implementation was using OpenGL and did in fact produce computational results as a byproduct of rendering noise on a lab screen, so there’s that.

I don’t know wtf cuda is, but the sentiment is pretty universal: please just fucking work I want to kill myself

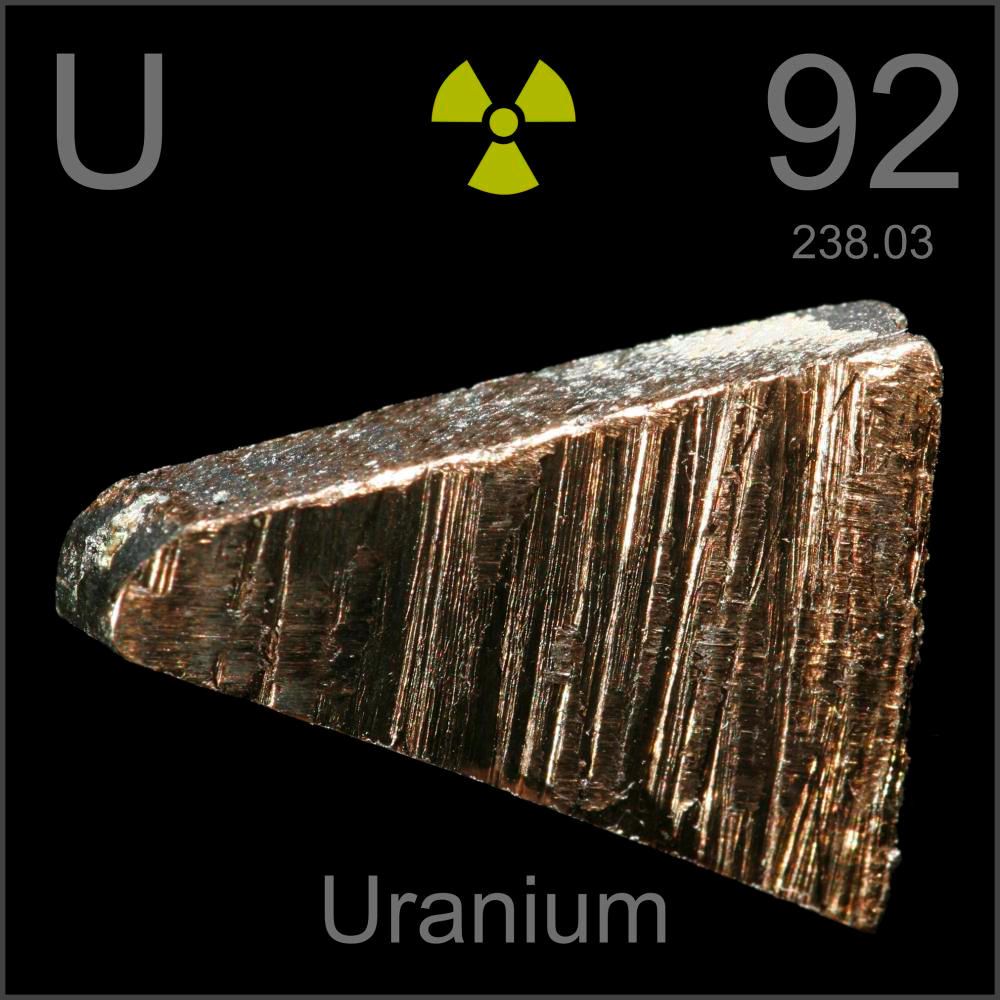

CUDA turns a GPU into a very fast CPU for specific operations. It won’t replace the CPU, just assist it.

Graphics are just maths. It takes plenty of operations to display the beautiful 3D models with the beautiful lights and shadows and shines.

The same maths used to display 3D can be used to calculate other stuff, like ChatGPT’s engine.
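
To make the "same maths" point concrete, here is a small sketch in plain Python. It runs on the CPU and uses no CUDA at all; it only shows that rotating a 3D point and computing one neural network layer are both a matrix times a vector, which is the kind of operation a GPU does in bulk. The names `matvec`, `dense_layer` and `ROTATE_90_Z` and the weights are made up for illustration, and real engines and models are far more involved.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


# Graphics use: rotate a point 90 degrees around the z axis.
ROTATE_90_Z = [
    [0.0, -1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]


def dense_layer(weights, inputs):
    """Machine learning use: one layer, i.e. a matvec followed by ReLU."""
    return [max(0.0, value) for value in matvec(weights, inputs)]


if __name__ == "__main__":
    # The point (1, 0, 0) ends up on the y axis.
    print(matvec(ROTATE_90_Z, [1.0, 0.0, 0.0]))  # [0.0, 1.0, 0.0]

    # Hypothetical weights for a layer with 3 inputs and 2 outputs.
    weights = [
        [0.5, -1.0, 2.0],
        [-1.0, -1.0, -1.0],
    ]
    print(dense_layer(weights, [1.0, 2.0, 3.0]))  # [4.5, 0.0]
```

Every row of the matrix can be worked out independently of the others, which is why this sort of job spreads so well across the thousands of small cores on a GPU.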

I don’t know what any of this means, upvoted everything anyway.